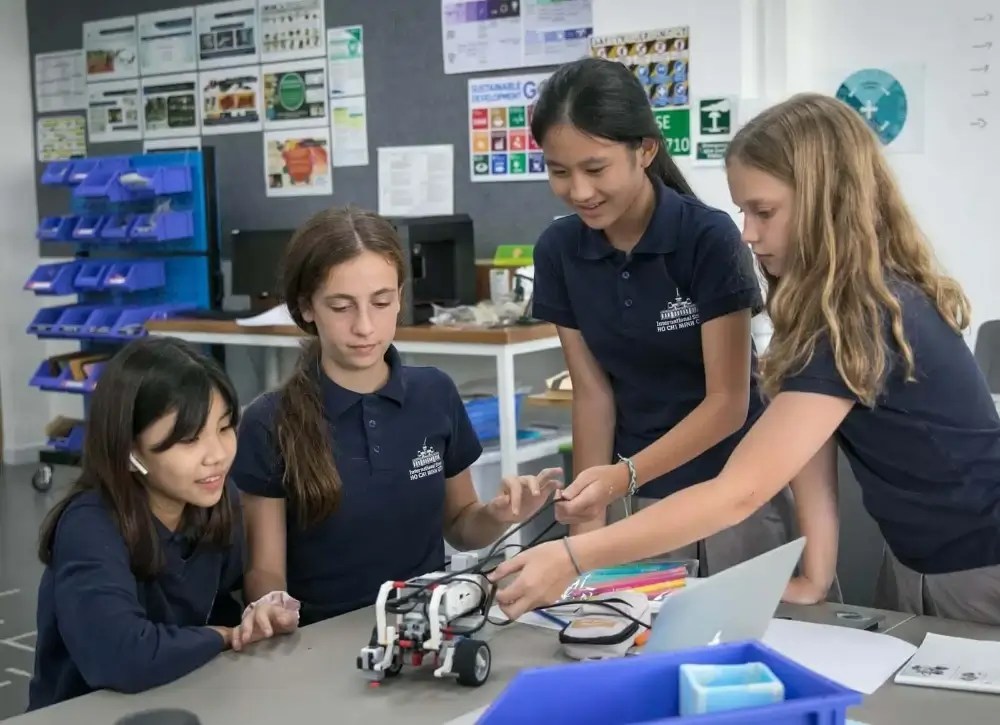

AI in Education at ISHCMC: Supporting Teachers, Empowering Learners, Managing Risks

Artificial Intelligence (AI) is increasingly present in many parts of our lives. At ISHCMC, we believe AI can amplify learning when used intentionally, ethically, and transparently. To make that happen, it’s essential to dispel common myths, acknowledge real risks, and show how our students and teachers can use AI to support learning and creativity in line with the IB philosophy.

Dispelling Common Myths About AI in Learning

Myth 1: “AI in education will replace teachers.”

Reality: Great teaching remains deeply relational. AI may suggest practice questions, organize ideas, or annotate text, but it cannot build classroom culture, notice a student’s subtle hesitation, or mentor a child through challenges. In our view, AI is a co-pilot, not the pilot.

Myth 2: “Using AI in education is cheating.”

Reality: Misuse of AI is cheating. But responsible use, with disclosure and guidance, can strengthen research, feedback, and creativity. At ISHCMC, we want to guide students on when and how to cite AI assistance, just as they would cite a book or website.

Myth 3: “Using AI makes students lazy.”

Reality: Poorly used AI can shortcut thinking. Well-used AI extends thinking by generating multiple perspectives, simulating real-world scenarios, or brainstorming counter-arguments. We want to design tasks so that AI is the starting point for deeper inquiry and a meaningful part of the process, not just a quick route to the product.

Myth 4: “AI knows everything.”

Reality: AI tools can be confidently wrong, out of date, or biased. Just as with websites, our students need to learn to verify claims, check sources, and apply disciplinary thinking: core IB habits that matter even more when AI is involved.

The Real Risks We Take Seriously

- Academic integrity: AI can generate text that students didn’t write themselves. We manage this risk by communicating and teaching clear expectations: what counts as acceptable AI use, how that use is monitored, and how students should state the way they used AI. At times, this also means rethinking assessment design (for example, project-based approaches, oral defenses, process journals, and drafts with reflections).

- Bias & fairness: AI outputs can reflect hidden biases or skewed training data. As with any research, we can teach algorithmic and information literacy (asking “Whose data? Whose voice? What’s missing?”) and cross-check with diverse sources.

- Privacy & safety: Some tools collect user data or store prompts on unknown servers. ISHCMC is fortunate to have taken part in the Flint AI pilot, which uses an age-appropriate, vetted platform. At the same time, we want students to understand why they should minimize data sharing and how to keep personal or sensitive information safe.

- Over-reliance & shallow learning: If students lean on AI to do all the work, they risk shallow learning. We guard against this with rubrics or clear expectations that emphasize process, originality, evidence, and transfer, not just polished products.

- Well-being & screen time: Whether it’s gaming, social media, or AI, we believe in balance for student well-being. Screen time for educational purposes is different from unstructured play time with technology. We coach a healthy balance that can include analogue first drafts when appropriate, tech-free reflection, and regular breaks.

A Framework for Intentional AI Use at ISHCMC

Because AI is moving so quickly, all schools are still relatively new to integrating these tools meaningfully. Students and staff need further training in best practices for AI use in education. In the meantime, these are some areas that ISHCMC and Cognita are working towards:

Clear guardrails and routines for AI use in academics:

- AI-Use Statements: For major tasks, students include a short note: what tool they used, for what purpose (e.g., brainstorming, outlining), how they verified it, and what they changed.

- Declaration ≠ Disqualification: We normalize disclosure of AI use, so students are empowered to self-monitor rather than hide their use of AI.

- Process over product: Oral conferences, design logs, and checkpoints ensure that the student’s understanding, not the tool, drives the grade.

Age-appropriate progression:

- PYP (Primary Years Programme): Curiosity first. Teachers lead a whole-class demo: “Let’s ask an AI to suggest ways to sort animals. Now let’s check with books and our observations.” At times, with specific tools such as Toddle AI tutors or Flint AI activities, students might complete a supervised, guided task in which AI is one component.

- MYP (Middle Years Programme): Structured use. Students craft prompts, check for bias, distinguish summarizing from synthesizing, and cite AI as a source of ideas, not an authority.

- DP (Diploma Programme): Discipline-specific application. For example, in Design, students might use AI to propose multiple design directions, then justify their final choice with user feedback and design criteria; in Language & Literature, students may use AI to generate counter-readings they then critique with textual evidence.

Creativity and design, not copy-paste:

- In Digital Design, students might ask AI to help them understand different cuisines, then create an entirely new fusion dish, cook it in Food Tech, and refine it against design criteria.

- In Language & Literature, students might ask AI for three alternative interpretations of a poem, then evaluate each against their own reading and analysis.

- In Science, students can simulate variables with AI-assisted tools to plan investigations, then collect real data and evaluate discrepancies and limitations.

Teacher development & review:

- ISHCMC runs ongoing professional learning on AI pedagogy, safe-use policies, and task redesign.

- We regularly review tools for privacy, accuracy, accessibility, and cost, and retire tools that don’t meet our standards.

Empowering Educators & Learners Through Ongoing Learning

As part of our commitment to safe and purposeful technology, ISHCMC uses Flint AI, a platform built specifically for education. Unlike open-ended chatbots that prioritize quick answers, Flint is designed around inquiry, reflection, and process: the same learning values that guide our IB framework. Its structure encourages students to ask better questions, test their reasoning, and document their thinking journey rather than simply receiving information.

Flint’s classroom-ready features support this emphasis on learning:

- Guided inquiry prompts that help students refine questions and approach topics from multiple perspectives.

- Draft-and-feedback tools that let students see how their ideas evolve, promoting metacognition and self-management.

- Teacher visibility dashboards that allow educators to view student prompts and revisions, offering insight into how students think, not just what they produce.

- Secure, education-grade privacy controls that keep student data protected and interactions age-appropriate.

These features connect directly to our Approaches to Learning (ATL) skills. When students use Flint AI, they’re practicing communication (asking clear, purposeful questions), research (evaluating and refining evidence), thinking (analyzing AI responses critically), and self-management (tracking their progress and reflecting on it). The platform turns AI into a thinking partner that supports curiosity, creativity, and principled decision-making, helping students become more reflective and independent learners.

A Simple Framework To Teach and Practise

Ultimately, when we invite AI into a classroom task, we would like to guide students and staff through a six-step process:

- Purpose: Why am I using AI here?

- Prompt: Am I asking a clear, specific question?

- Probe: What seems off or missing?

- Proof: What evidence supports or challenges this output?

- Personalize: How do I transform this into my own thinking and voice?

- Publish ethically: Have I declared and cited AI assistance?

Our Commitment

At ISHCMC, our stance is simple but will take time to fully implement: AI should expand human potential, not replace it. We commit to equipping learners to be ethical, discerning, and creative users of powerful tools. We’ll continue refining our practices so that our students graduate not only with strong content knowledge but with the agency and confidence to use AI thoughtfully and responsibly in a world that will demand it.